Download this week’s example code.

The Concordance

Having developed a basic understanding of the tools involved in slicing, dicing, and baking cakes from text, we move now to the second phase of the course: models of text. These models will answer one or both of the following questions: what is a text like? How can we make another text that looks just like this one?

Our first model is the concordance, which is the result of finding all of the unique tokens in a text, along with their immediate contexts. We’ll have a working program for creating a concordance at the end of this lesson, but we’re going to take baby steps to get there. Along the way, we’ll learn about two data structures (the set and the map) that will make the job easier. Our first task: extract a list of unique tokens from a text.

Unique tokens: the HashSet

The first new data structure that we’re going to add to our toolbox is the HashSet. (Java reference here.) A HashSet is works a lot like an ArrayList: you can add objects to it (with the add method) and iterate over the objects in it (in, e.g., a for loop). The two main ways that HashSets differ from ArrayLists:

- ArrayLists allow for duplicate entries. HashSets don’t. (That’s what makes it a “set” in the mathematical sense.) Adding an object to a HashSet once it’s already been added has no effect.

- ArrayLists will return your objects in the same order you put them in. HashSets don’t. Looping over a HashSet will return the objects in an arbitrary (but not random) order.

We’ll use the first property of HashSets listed above to our advantage. The following program (Unique.java) finds all unique words in the given input and prints them to output:

import java.util.HashSet;

import com.decontextualize.a2z.TextFilter;

public class Unique extends TextFilter {

public static void main(String[] args) {

new Unique().run();

}

private HashSet<String> uniqueWords = new HashSet<String>();

public void eachLine(String line) {

String[] tokens = line.split("\\W+");

for (String t: tokens) {

uniqueWords.add(t);

}

}

public void end() {

for (String word: uniqueWords) {

println(word);

}

}

}

The mechanics of this program are fairly easy to understand. Each line of text from the input is split into words, each of which is then passed to the add method of our HashSet object. Note that we don’t have to check to see if a word is already in the HashSet—the HashSet object does that for us, and will simply ignore any request to add a word that’s already in there. Then, in the end function, we print out every word we’ve stored in the HashSet (using the same syntax that we used to iterate over every item in an array or ArrayList).

Given an input like:

Paris Paris Paris Paris in in in the the spring

… we would expect output like:

Paris in the spring

(but not necessarily in that order! see below)

HashSet: the gritty details

So how does the HashSet work? How can it tell whether or not something has already been put into it? Here’s the basic gist, leaving aside some of the frothy complications. (See the Wikipedia page for a more detailed explanation.)

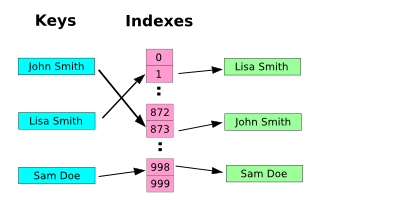

hashset diagram

(adapted from Wikipedia)

Every object that you put into a HashMap is first sent through a hashing algorithm. The point of this algorithm is to generate a unique number (a “hash”) for every object that you pass to it. In the example above, the hashing algorithm returns the number 873 for string “John Smith”; the number 1 for the string “Lisa Smith”; and the number 998 for the string “Sam Doe.” These numbers are then used as an index to an array. When you call the add method of a HashSet object, whatever you passed to it gets stored in that array at the index returned by the hashing algorithm. When you ask the HashSet (with its contains method) if a particular object is present, it calculates the hash for that object, looks it up in the array, then returns true if something’s there (and false otherwise).

Now we can explain why the HashSet doesn’t return objects in the same order they were put in: the HashSet doesn’t have the means to remember the order. When you iterate over the HashSet (using, e.g., a for loop), it returns objects in the order of their hash—that’s the only order the HashSet knows.

“But Adam,” you say. “Couldn’t I do all this with an ArrayList?”

You could! It is, of course, possible to check an ArrayList for duplicates, and indeed the ArrayList class provides a method to do just such a thing–contains. We can easily imagine what the code for such a function might look like:

boolean contains(ArrayList<String> alist, String key) {

for (String item: alist) {

if (item.equals(key)) {

return true;

}

}

return false;

}

The problem with this function is easy to spot: in order to see if key is in alist, we potentially have to look at every single item in alist. If we’re checking for a duplicate entry in a large ArrayList, this can slow a program down.

If we use a HashSet, on the other hand, we only need to perform a single check to determine if an object is already present in the HashSet: just look at the index associated with that object’s hash value. For large amounts of data, this is much, much faster.

Aside: Hashing algorithms

From a conceptual perspective, a hashing algorithm is a simple thing: we can think of all kinds of ways to turn (e.g.) a string into a number. We could add together the ordinal value of the characters in the string, for example (‘a’ = 1, ‘b’ = 2 …), or assign each word to its order in the dictionary (“aardvark” = 0, …, “zyzzyva” = 204,254).

It turns out, however, that designing a hashing algorithm suitable for storing data is fairly difficult. The ideal algorithm needs to return numbers that are unique for every input—even similar strings should return different numbers—and should provide values that are uniformly distributed over a given range. This is the kind of stuff that people get Computer Science degrees to understand (though the Wikipedia page on hashing algorithms is a good introduction). Thankfully, we don’t need to bother with the details, because Java’s taking care of us. All of the hash value calculations are abstracted away in the HashSet class.

Word count: the HashMap

The next step in building our concordance program is to count how many times a particular word appears in the input. In order to do this, we’re going to use another Java data structure: the HashMap. (Detailed documentation here.)

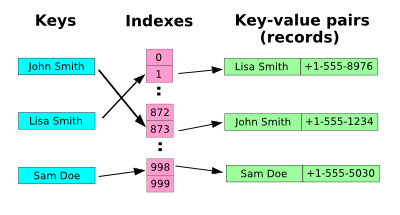

You can think of a HashMap as a kind of HashSet that contains not just a set of unique keys, but also a series of values associated with those keys. Schematically, it looks like this:

hashmap diagram

This diagram looks a lot like the HashSet diagram above, but look closely—the HashMap stores not just the key, but a value associated with that key. The data structure is called a HashMap because it uses a hashing algorithm to “map” a key to a value. In the case of our program that counts the number of times unique words occur in a text, we’re mapping strings (words) to integers (the number of times that word occurs). Let’s look at the source code for WordCount.java:

import java.util.HashMap;

import com.decontextualize.a2z.TextFilter;

public class WordCount extends TextFilter {

public static void main(String[] args) {

new WordCount().run();

}

HashMap<String, Integer> words = new HashMap<String, Integer>();

public void eachLine(String line) {

String[] tokens = line.split("\\W+");

for (String t: tokens) {

if (words.containsKey(t)) {

int currentCount = words.get(t);

words.put(t, currentCount + 1);

}

else {

words.put(t, 1);

}

}

}

public void end() {

for (String key: words.keySet()) {

println(key + ":" + words.get(key));

}

}

}

The basic shell of this program should look familiar. We’re examining each line of the input, and breaking each line up into its component words. Once we’ve done that, we loop over each of the words that we’ve extracted, using the containsKey method of the words HashMap to determine whether or not that word has already been stored. If it has, we use the get method of the HashMap to get the current count associated with that word, then use put to store a new value for that word (in this case, the previous value plus one). If the HashMap doesn’t already contain the word in question, we use put to store that word, along with the value one (at this point, we’ve seen that word exactly once).

In the end method, the HashMap’s keySet returns the keys of the HashMap, which we then iterate over, printing out the key and the number associated with that key. Given genesis.txt as input, we might expect output like the following:

fly:1 let:6 God:32 tree:4 multiply:3 fill:1 blessed:2 after:11 open:1 firmament:9 (etc.)

Remember: because a hashing algorithm is involved, the order in which these lines are displayed is unpredictable!

HashMaps: What goes in

You might have noticed that the declaration of the HashMap in the above example is a little unfamiliar. We’re used to specifying what type of object will go into our ArrayLists, and we saw earlier how to specify what types of objects will go into our HashSets. It turns out that with HashMaps, we have to declare not just what type of object will be used as keys, but also what type of objects will be used as values. You saw the syntax for how to do that above, but I’ll repeat it here:

HashMap<String, Integer> foo = new HashMap<String, Integer>();

In this example, the keys of the HashMap will all be Strings, and the values of the HashMap will all be Integers. (We can actually use objects of any class as keys or values—the full Concordance example uses a user-defined class in the values slot. Using a user-defined class as a key is a bit trickier, so we’ll mostly be using strings in our in-class examples. If you’re interested in the nitty-gritty, here’s a good starting point.)

Of ints and Integers

One final clarification on the HashMap example above. Why does it say Integer instead of int? In order to understand the reason for this, you’ll need to understand the difference between Java objects and Java primitives.

It’s often said of Java that it’s an “object-oriented language” and that in Java “everything is a class.” But many of the most basic data types in Java—int, float, and boolean, among others—aren’t objects at all: they don’t belong to a class and you can’t call methods on them. Variables of these types are called primitives. There are a number of distinctions between objects and primitives, most of which Java takes care of behind the scenes.

It turns out, however, that Java collections (i.e., ArrayLists, HashMaps, HashSets and the like) can only contain objects, and HashMaps in particular can only use objects as keys. In order to store a primitive value in a collection, we have to use a “wrapper” class to create an object that contains a primitive value, but looks to Java as though it’s an object. The wrapper class for int is called Integer; the wrapper class for boolean is called Boolean; the wrapper class for float is called Float, and so forth.

Fortunately, when using methods like get, add and put, Java will automatically perform the conversion between the primitive value and the wrapper object. (For the curious, this process is called autoboxing.) So the only place you need to remember the difference between primitives and wrapper objects is in the (e.g.) HashMap declaration. You must write:

HashMap<String, Integer> foo

but never

HashMap<String, int> foo

HashMaps vs. ArrayLists

One thing that you might have noticed is that both HashMaps and ArrayLists support a get method—but what you pass to the get method is different. An ArrayList takes a numerical index, while a HashMap takes a key of type String (or, actually, an object of any type). So one way to think about the HashMap is that it’s really just a kind of ArrayList that uses strings (or, actually, objects of any type) as indices, rather than integers.

Many programming languages have a structure similar to the HashMap. In PHP, the analogous data structre is the associative array; in Perl it’s the hash, and in Python it’s the dictionary.

The Concordance, a Complete Example

Example code for a complete implementation of the Concordance can be found here.

An adventure in visualization: using TextFilter classes with Processing

The TextFilter class was designed to make it easy to write Java programs that get their input and output from the UNIX command line. But with a little bit of work, you can use that same code in a Processing sketch. If you’ve written a class that extends TextFilter (“a TextFilter class” in the instructions below), you can bring it into a Processing sketch as an extra tab. Here’s how. (Note that these instructions will only work with Processing 1.0 or later—earlier versions aren’t guaranteed to use Java 5, which is required for a lot of the stuff we’re doing in this class.)

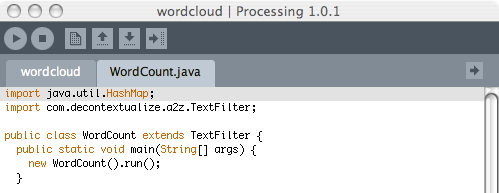

1. Create a Processing sketch, and add a new tab. (The “New Tab” menu item can be accessed by clicking on the right-most icon in the Processing toolbar.) Your new tab should have the same name as your TextFilter class source code (e.g., WordCount.java). The .java at the end is important: it tells Processing to suspend its normal rules for parsing code and include the contents of the tab in question as a regular Java class.

2. Cut and paste your TextFilter class into the new tab. Your Processing window should look something like this:

Processing/TextFilter example screenshot

3. Add a2z.jar to your sketch. The a2z.jar file contains the definition of the TextFilter class, so it’s important that Processing can find it. To add the jar to your sketch, go to Sketch > Add File. (The .jar will end up in a subfolder of your sketch called “code.”)

4. Add the text file that you want to work with to your sketch. Processing can’t read from the UNIX command-line input; it needs to read from a file. In order to access a file, it must be added to the sketch. Use Sketch > Add File to add the file to your sketch.

5. Use your TextFilter class to munge some text. The best place to do this is in your Processing sketch’s setup method. The main difference between using your TextFilter class on the command-line and in a Processing sketch is that instead of calling run on your newly instantiated TextFilter object, you’ll call either collectString or collectLines.

Why is that? The run method of the TextFilter object is specifically designed to read from input at the command line, and print output to the command line. Processing sketches don’t read or write from the command line, so you can’t use this method in a Processing sketch! The collectLines method, on the other hand, collects all of the output from your TextFilter object and, instead of printing it out, returns it as an array of strings; the collectString method collects all of the output and returns it as a big, long string. You’ll need to use one of these two methods to use your TextFilter class in Processing. Here’s the setup function from the “wordcloud” sketch, included in this week’s example code:

String[] lines;

PFont font;

void setup() {

size(500, 500);

font = createFont("Arial", 72);

lines = new WordCount().collectLines(createReader("genesis.txt"));

}

The createReader function is a Processing built-in that returns a reference to a file in your sketch that TextFilter understands. (This function means that your TextFilter class will work when it’s run as a standalone application or if it’s run on the web.) The lines array will have the exact same contents as the output of the WordCount.java program when run from the command line, one array index per line.

Reading for next week

- Hartman, C. Travesty. (Excerpted from Virtual Muse: Experiments in Computer Poetry)

- Dean, J. “Tag clouds and the decline of symbolic efficiency.”

- Bonus addition! BLDGBLOG on word-frequency clouds.

Homework

(due 24 feb)

Modify, augment, or replace one of the in-class examples. A few ideas, in order of increasing complexity:

- Make Unique.java insensitive to case (i.e., “Foo” and “foo” should not count as different words).

- Modify WordCount.java to count something other than just words (e.g., particular characters, bigrams, co-occurrences of words, etc.).

- Using the wordcloud sketch as a model, create a Processing sketch that correlates a word and its frequency in a text with visual output in a more interesting way—something other than (for example) a greater frequency leading to bigger text.

- Modify ConcordanceFilter.java to output only n characters of context surrounding a word. (You could implement this either as a change to how ConcordanceFilter.java displays context, or in how Concordance.java saves context.)

- Modify ConcordanceFilter.java to display only the lines where one word co-occurs with another (e.g., all lines where “cat” appears “dog”). Your implementation could hard-code the search terms, or you could accept search terms on the command line.

- (difficult!) Investigate Java’s Collections class. See if you can figure out how to use

Collections.sort()to sort the output of ConcordanceFilter.java—first in alphabetical order, then ordered by word count. (See the official Sun tutorial.)

Reply

You must be logged in to post a comment.

2 comments

Comments feed for this article

Trackback link: http://www.decontextualize.com/teaching/a2z/flight-of-the-concordance/trackback/