Recursion

In programming, recursion is the term for when a function calls itself. It’s useful when we’re working with problems that can be defined in terms of themselves, and when working with nested data structures.

The classic example is the Fibonacci sequence: The first two fibonacci numbers are 0 and 1, and each following number is the sum of the previous two. (1, 1, 2, 3, 5, 8, 13, 21, …).

>>> def fib(n): ... if n == 0 or n == 1: # the "base case," prevents infinite recursion ... return 1 ... else: ... return fib(n-1) + fib(n-2) ... >>> fib(6) 13

The “base case” in the function above prevents infinite recursion. A recursive function must, under some circumstance, return from the function without calling itself; otherwise, the recursion would continue forever.

It’s true that we could use also solve this problem without using a recursion (using, say, a while loop), but the recursive solution is more elegant and readable.

Exercise: The factorial of a number n (n!) is the product of all positive integers less than or equal to n. Write a recursive function that calculates the factorial of a number. (Don’t forget your base case!)

Traversing a nested data structure with recursion

The most common practical problem solved with recursion is traversing a nested data structure. The following program (extract_strings.py) is an example of such a program. It uses BeautifulSoup to visit every tag in an HTML document and print out only the contents of those tags—in essence, extracting only strings from the page.

import urllib

import sys

from BeautifulSoup import BeautifulSoup, Tag

def extract_strings(elem):

# Tag objects have a contents attribute, which gives us all of its children

# tags

if isinstance(elem, Tag):

# call this function for each child element

for next_elem in elem.contents:

extract_strings(next_elem)

# if it's not a Tag, then it's probably something we can print out; we can't

# recurse further, so return after we've printed

else:

elem = elem.strip()

if len(elem) > 0:

print elem.encode('ascii', 'ignore')

return

url = sys.argv[1]

data = urllib.urlopen(url).read()

soup = BeautifulSoup(data)

extract_strings(soup)

The trick to this program is knowing how the Tag class works in Beautiful Soup. Tag objects have an attribute called contents, which is a list of all elements that are direct descendants of that tag in the document. Some of the elements of contents are themselves Tag objects; others are just strings, which don’t have any descendents in the document hierarchy.

Starting with a given element (whether Tag or string), the extract_strings function first checks to see whether the object is a Tag object; if so, it calls itself on each element of the Tag object’s contents list. If it’s not a Tag object, it must be a string, and so we print it out. Each successive call to extract_strings will dig deeper into the document, until eventually we’ve found all of the document’s strings.

Exercise: There are some tags, like script, whose content we may not want to extract. Modify this program to not extract strings from script tags or any tags beneath script in the document hierarchy.

Context-Free Grammars

From Markov to Chomsky: where n-gram analysis fails

What aspects of the syntax of human language does n-gram analysis not capture? Some are easy to catch, like these snippets from the output of a Markov chain algorithm (an order 4, character-level, source text The Waste Land):

And I ready, and gramophone.)

“What four army faces sings, slimy belly on the Metropole.

Anyone who has generated character-level texts with a Markov chain will recognize this problem: because an n-gram analysis only takes into account the immediate context of a word/character/token, there’s no way for it to recognize the fact that a quotation mark or parenthesis is occurring without its pair.

The same problem is demonstrated below, but in a slightly different way:

- That dog laughed.

- That dog over there laughed.

- That dog with the smelly ears laughed.

- That dog I told you about yesterday—the insolent one—laughed.

A human being can understand the structure of these sentences, and understand what the sentences mean. They’re all about laughing, and there’s a dog that’s doing the laughing.

But an n-gram analysis of these sentences won’t be able to tell us these (seemingly) simple facts: it can only tell us which words occur in which order. A partial word-level order 3 n-gram analysis of the sentences given above would yield the following:

that dog laughed over there laughed smelly ears laughed insolent one laughed

If you tried to figure out the text’s meaning based purely on n-gram analysis, you’d come up with some pretty strange conclusions: the dog only shows up once, and the other n-grams seem to indicate that “over there,” “smelly ears” and some mysterious “insolent one” is doing the laughing the rest of the time.

Context-Free Grammars

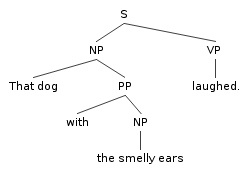

From the examples above, we can see it takes more than just a knowledge of how words occur in sequence to understand (and generate) human language. More specifically, language has a hierarchical organization: sentences are composed of clauses, which themselves might be composed of clauses. A sentence, for example, is composed of a noun phrase and a verb phrase, and noun phrases and verb phrases can have their own internal structure, even containing other noun phrases or verb phrases. Linguists illustrate this kind of structure with tree diagrams:

syntax tree

(generate your own tree diagrams with phpSyntaxTree)

Clearly, we need a model that captures the hierarchical and recursive nature of syntax. One such model is the context-free grammar.

A grammar is a set of rules. A context-free grammar defines rules in a particular way: as a series of productions. Our goal is to use these rules to generate sentences that have the structure of English. Here’s a mini-grammar of English that we can use for our initial experiments:

S -> NP VP NP -> the N VP -> V VP -> V NP N -> amoeba N -> dichotomy N -> seagull V -> shook V -> escaped V -> changed

Key: NP = noun phrase; VP = verb phrase; S = sentence; N = noun; V = verb

Each rule specifies a “expansion”: it states that the symbol on the left side of the arrow, whenever it occurs in the process of producing a sentence, can be rewritten with the series of symbols on the right side of the arrow. Symbols to the left of the arrow are non-terminal; symbols to the right side of the arrow can be a mix of terminal and non-terminal. (“Terminal” here simply means that there is no rule that specifies how that symbol should be re-written.)

A symbol that appears on the left side more than once (like VP, N and V above) can be rewritten with the right side of any of the given rules. We’ll sometimes write such rules like this, for convenience, but it has the same meaning as the three N rules above:

N -> amoeba | dichotomy | seagull

Generating sentences

Okay! So let’s generate a simple sentence from our grammar. We have to choose a non-terminal symbol to start the process: this symbol is called the axiom, and it’s usually S (or whatever the “top level” rule is).

After we choose the axiom, the process for generating a symbol is simple. The process can be described like this:

- Replace each non-terminal symbol with an expansion of that symbol.

- If the expansion of a symbol itself contains non-terminal symbols, replace each of those symbols with their expansions.

- When there are no non-terminal symbols left, stop.

For this example, we’ll use S as our axiom, and stipulate that whenever we encounter a non-terminal symbol with more than one production rule, we’ll chose among those options randomly. Here’s what the generation might look like:

S(apply rule: S -> NP VP)NP VP(apply rule: NP -> the N)the N VP(apply rule: N -> amoeba)the amoeba VP(apply rule: VP -> V)the amoeba V(apply rule: V -> escaped)the amoeba escaped

This isn’t a very sophisticated example, true, but the machinery is in place for producing very complex sentences. Of course, no one wants to do all this by hand, so let’s look at a program written in Java that automates the process of generating sentences from context-free grammars.

context_free.py

After instantiating the object, we add rules to the grammar by calling the add_rule method, whose first argument is a non-terminal symbol and whose second argument is a possible expansion for that symbol. The get_expansion method takes the axiom, and then “expands” that axiom out into a full sentence, following a set of steps very similar to the one we followed above. The above grammar, for example, will create output that looks like this:

the cat lusts after the weinermobile

Here’s the code:

class ContextFree(object):

def __init__(self):

self.rules = dict()

self.expansion = list()

# rules are stored in self.rules, a dictionary; the rules themselves are

# lists of expansions (which themselves are lists)

def add_rule(self, rule, expansion):

if rule in self.rules:

self.rules[rule].append(expansion)

else:

self.rules[rule] = [expansion]

def expand(self, start):

from random import choice

# if the starting rule was in our set of rules, then we can expand it

if start in self.rules:

possible_expansions = self.rules[start]

# grab one possible expansion

random_expansion = choice(possible_expansions)

# call this method again with the current element of the expansion

for elem in random_expansion:

self.expand(elem)

else:

# if the rule wasn't found, then it's a terminal: simply append the

# string to the expansion

self.expansion.append(start)

# utility method to run the expand method and return the results

def get_expansion(self, axiom):

self.expand(axiom)

return self.expansion

if __name__ == '__main__':

cfree = ContextFree()

cfree.add_rule('S', ['NP', 'VP'])

cfree.add_rule('NP', ['the', 'N'])

cfree.add_rule('N', ['cat'])

cfree.add_rule('N', ['dog'])

cfree.add_rule('N', ['weinermobile'])

cfree.add_rule('N', ['duchess'])

cfree.add_rule('VP', ['V', 'the', 'N'])

cfree.add_rule('V', ['sees'])

cfree.add_rule('V', ['chases'])

cfree.add_rule('V', ['lusts after'])

cfree.add_rule('V', ['blames'])

expansion = cfree.get_expansion('S')

print ' '.join(expansion)

Let’s break it down, piece by piece.

Keeping track of the rules

self.ngrams is a dictionary, with rules (as strings) as keys, and possible expansions of those rules as lists of tuples (as values). The add_rule method provides an easy interface to this dictionary.

Recursive expansion

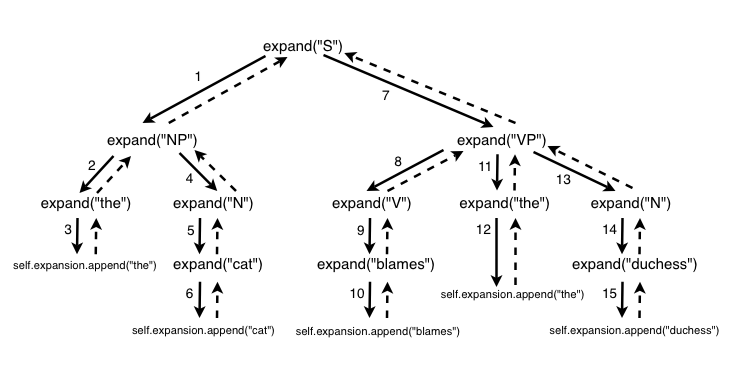

The expand method of the ContextFree class is where the heavy work gets done: taking an axiom and turning it into a full sentence.

terminal/non-terminal

Here’s where it gets tricky

The expand method then loops over every element in random_expansion and calls itself to expand that symbol. This is called recursion: when a function calls itself. Recursion is a handy tool in programming, especially for tasks that are self-similar—such as generating sentences from a context-free grammar.

The idea here is that the expansion of one symbol—say, S—will set off a chain reaction of expansions of other symbols, until there are no more symbols to be expanded. A call to expand("S") will itself call expand("NP") and expand("VP"); a call to expand("NP") will itself call expand("the") and expand("N"); and so forth.

The diagram below illustrates the whole process (again, using our tiny sample grammar as an example). Solid arrows indicate a call to expand, dashed arrows indicate a return from expand, and the numbers indicate in which order the calls are performed.

The order of calls is important. The expand method expands the left-most symbol to its full depth (e.g., all the way down to self.expansion.append()) before it moves on to any more symbols.

Infinite recursion

The astute reader will notice that there’s the potential here for an infinite loop. Let’s say that the only expansion for the symbol NP looked like this:

NP -> NP or NP

Each attempt to expand NP would lead to another attempt to expand NP, which would lead to another attempt to expand NP, and so forth. The expansion would never complete, and Java would eventually complain that it had run out of memory.

One way to ensure that your context-free grammars will always completely expand is to check to make sure that every symbol has at least one expansion that will eventually lead to terminal symbols. For example, if we added the following rule to our grammar, we’d be okay:

NP -> the dog

(Of course, this grammar could potentially generate extraordinarily long strings, like the dog or the dog or the dog or the dog or the dog or the dog .... But it will eventually finish.)

context_free_reader.py

Okay, so the ContextFree class has functions to add rules to a grammar and to generate sentences from that grammar. So far, though, we’ve had to specify those rules inside the program, calling add_rule by hand. “It would be nice,” I hear you say, “if we could specify the grammar in a separate file, then write a program to read that file in and build the ContextFree object accordingly. That way, we can make changes to the grammar without recompiling our program.”

Well, you’re in luck! The ContextFilter class does just that. It’s a (very) simple example of a parser: a program that reads a file and builds some sort of data structure from its contents. In this case, we’re building a parser for a specification of a context-free grammar. There’s nothing special about the format I chose for this file—it’s not an accepted standard for specifying CFGs—but it’s feature complete and fairly easy to parse. Here’s test.grammar from this week’s example code:

# clauses S -> NP VP S -> Interj NP VP NP -> Det N NP -> Det N that VP NP -> Det Adj N VP -> Vtrans NP # transitive verbs have direct objects VP -> Vintr # intransitive verbs have no direct object # terminals Interj -> oh, | my, | wow, | damn, Det -> this | that | the N -> amoeba | dichotomy | seagull | trombone | corsage | restaurant | suburb Adj -> bald | smug | important | tame | overstaffed | luxurious | blue Vtrans -> computes | examines | foregrounds | prefers | interprets | spins Vintr -> coughs | daydreams | whines | slobbers | vocalizes | sneezes

Each line in the file specifies a rule. The non-terminal symbol is on the left-hand side of the string “->” and the expansion is on the right-hand side. If a non-terminal symbol has multiple expansions, those expansions can either be specified on separate lines (as with NP), or on the same line, using the pipe character (|) as a separator. White space around -> and | is ignored. Grammar files can also have comments: anything following the # character will be ignored, up until the end of the line.

We’re going to implement our parser for this class by subclassing the ContextFree class, adding a method (parse_from_file) to read the grammar from a file. Here’s the class definition and some test code:

import context_free

class ContextFreeReader(context_free.ContextFree):

def parse_from_file(self, file_obj):

import re

# rules are stored in the given file in the following format:

# Rule -> a | a b c | b c d

# ... which will be translated to:

# self.add_rule('Rule', ['a'])

# self.add_rule('Rule', ['a', 'b', 'c'])

# self.add_rule('Rule', ['b', 'c', 'd'])

for line in file_obj:

line = re.sub(r"#.*$", "", line) # get rid of comments

line = line.strip() # strip any remaining white space

match_obj = re.search(r"(\w+) *-> *(.*)", line)

if match_obj:

rule = match_obj.group(1)

expansions = re.split(r"\s*\|\s*", match_obj.group(2))

for expansion in expansions:

expansion_list = expansion.split(" ")

self.add_rule(rule, expansion_list)

if __name__ == '__main__':

cfree = ContextFreeReader()

cfree.parse_from_file(open("test.grammar"))

expansion = cfree.get_expansion('S')

print ' '.join(expansion)

The first thing that happens in this method is a call to re.sub("#.*$", "", line): this will replace every # character followed by zero or more other characters (.*) up until the end of the line ($) with nothing.

The pattern passed into re.search on the next line is a little bit more complex. Here’s a reading:

(\w+)– The first group of characters I’m looking for is one or more alphanumeric characters*-> *– You’ll find that group just before you find the sequence of characters->, which may (or may not) be surrounded by some space characters.(.*)– The second group of characters I’m looking for comes after all of that. Actually, the second group is just whatever characters you find after*-> *—I’m not picky.

Then we create a match object match_obj with the results of that regular expression. If all goes according to plan, m.group(1) will have the non-terminal rule, and m.group(2) will have the expansion for that rule.

We still have one more thing we need to check for, though: multiple expansions on the same line. A call to re.split will split up the second group, using the pipe character as a delimiter. More specifically, \s*\|\s* translates to “a pipe character, maybe but not necessarily with whitespace to either side.” Why the backslash in front of the pipe character? Because the pipe character in a regular expression usually has a special meaning: alternation. The double backslash in front tells Python to ignore the special meaning and just look for a literal pipe character.

Finally, we loop through expansions and add them to our ContextFree object using add_rule. Call the program like this:

$ python context_filter_reader.py

… and get output like this:

oh, the bald corsage computes the important trombone

Exercises

- Augment

test.grammarto model some part of English syntax (or the syntax of some other language) that isn’t currently part of the grammar. Ideas: proper nouns, quotations, parenthetical remarks, adverbs, adverbial phrases, questions, etc. What’s easy to model, and what’s hard to model? Modify or extend the ContextFree class as necessary. - Build a grammar that generates something other than English sentences. For example: stories, images, sound, computer programs, other grammars, etc. Modify or extend the ContextFree class as appropriate.

- Advanced: Re-write the MarkovGenerator class to use recursion.

Reply

You must be logged in to post a comment.

No comments

Comments feed for this article

Trackback link: http://www.decontextualize.com/teaching/dwwp/topics-ii-recursion-and-context-free-grammars/trackback/