List comprehensions

When you’re programming, you’ll frequently need to create a list that is a copy of another list, except with certain elements modified or filtered out. Normally, you’d write code following this pattern:

```python
source = ...  # some list
dest = list()
for item in source:
    if condition:
        dest.append(expression)
```

Python has a special syntactic structure called a list comprehension to condense this logic into one line:

```python
dest = [expression for item in source if condition]
```

Here, dest is the new list, source is the source list, expression is some Python expression using item, and condition is a Python expression that evaluates to True or False. The new list contains the value of expression for each item in source, in order, but only for the items where condition evaluates to True. (The if clause is optional; if it is omitted, no items from the source list are skipped.)

(If you’re familiar with SQL, you can think of a list comprehension as being analogous to a SELECT statement: SELECT expression FROM source WHERE condition.)
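To make the correspondence concrete, here is a small sketch that writes the same computation both ways: once with the explicit loop pattern and once as a comprehension. The function names are made up for this illustration; the expression is item * item and the condition is item % 2 == 0.

```python
def squares_of_evens_loop(source):
    # The explicit pattern: start empty, test each item, append the expression.
    dest = list()
    for item in source:
        if item % 2 == 0:              # condition
            dest.append(item * item)   # expression
    return dest


def squares_of_evens_comprehension(source):
    # The same expression, loop variable, source and condition in one line.
    return [item * item for item in source if item % 2 == 0]


numbers = list(range(10))
print(squares_of_evens_loop(numbers))
print(squares_of_evens_comprehension(numbers))
```

Both calls print [0, 4, 16, 36, 64]. Each piece of the loop maps onto exactly one piece of the comprehension.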

A quick interactive session to demonstrate:

```python
>>> [x*x for x in range(10)] # squares of numbers from 0 to 9
[0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
>>> [x*x for x in range(10) if x % 2 == 0] # squares of only even numbers from 0 to 9
[0, 4, 16, 36, 64]
>>> words = ['it', 'was', 'the', 'best', 'of', 'times']
>>> [w.upper() for w in words] # copy of list words, but in upper case
['IT', 'WAS', 'THE', 'BEST', 'OF', 'TIMES']
>>> [w for w in words if len(w) == 3] # only those words with a length of 3
['was', 'the']
>>> [w[0].upper() for w in words] # just the first character of each item in words
['I', 'W', 'T', 'B', 'O', 'T']
```

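The advantage of list comprehensions is that they express our intent more directly: the transformation and the filter are stated in a single expression, instead of being spread across a loop, an if statement and a call to append.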

Exercise: Re-write the following code to use a list comprehension.

```python
names = ['Joe', 'Bob', 'Gerty', 'Ross', 'Andy', 'Mary']
mod_names = list()
for n in names:
    mod_names.append("It's the " + n + "-meister!")
```

Example: adj_extractor.py

The following example depends on the existence of a file named adjectives, available in the session11 folder. It reads every line of standard input, extracts only the words that appear in adjectives, and prints those adjectives.

```python
import sys

def load_adjectives():
    adj = set()
    for line in open('adjectives'):
        line = line.strip()
        adj.add(line)
    return adj

adj_set = load_adjectives()

for line in sys.stdin:
    line = line.strip()
    adjs = [s for s in line.split(" ") if s.lower() in adj_set]
    if len(adjs) > 0:
        print(', '.join(adjs))
```

Everything in this file should be familiar as an old friend, except for the line that assigns adjs, where we’re using a list comprehension. This comprehension translates as “give me every element of the list produced by splitting the line, if converting that element to lower case yields a string in adj_set.” (Exercise: write this comprehension as a for loop.) Given lovecraft_dreams.txt as standard input, expect output like this:

```
snowy, painted
distant
new, last
indifferent
wonderful
futile
naked
nebulous
prosaic
strange, enchanted
golden, overhanging
shadowy
thick
fragrant
peaked, small
waking
fell
unlit, crumbling
glowing
winged
...
```
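The comprehension can be tried out without the adjectives file or standard input by wrapping it in a function and feeding it a few strings. The sketch below also builds the set with a set comprehension (a close relative of the list comprehension, available from Python 2.7 on) instead of the loop in load_adjectives. The function names and the sample words are made up for illustration.

```python
def load_adjectives(lines):
    # Set comprehension: one stripped entry per line of the word list.
    return {line.strip() for line in lines}


def extract_adjectives(line, adj_set):
    # Keep each space-separated word whose lower-case form is in adj_set;
    # the word itself is kept as it appeared, capitalization included.
    return [s for s in line.split(" ") if s.lower() in adj_set]


# A tiny, hypothetical stand-in for the contents of the adjectives file.
adj_set = load_adjectives(["snowy\n", "painted\n", "golden\n"])
print(extract_adjectives("The Snowy hills and painted towers", adj_set))
print(extract_adjectives("golden, bright", adj_set))
```

The first call returns ['Snowy', 'painted']. The second returns an empty list: split(" ") leaves punctuation attached, so "golden," does not match the entry "golden".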

Further reading: More list comprehension examples from the Python tutorial.

Text visualization: An exercise with Processing

In order to visualize text, we need some way to, well, make graphics. There is no shortage of ways to do this in Python (Nodebox is a good starting point, and Pyglet is an OpenFrameworks-esque OpenGL wrapper).

But I already know Processing, and it was built from the ground up for visualizing data. So my workflow for text visualizations often works like this: write a Python program to do the text analysis; output the resulting data in an easy-to-parse format; then read the data into a Processing sketch and use Processing to draw something based on it.

Case in point: a simple word-count visualization. Here’s the Processing sketch, and here’s the Python code I used to do the analysis:

```python
class SimpleConcordance(object):
    def __init__(self):
        self.token_count = dict()
        self.token_total = 0

    # for every whitespace-separated token, strip off punctuation, convert to
    # lower case, return as list
    def tokenize(self, s):
        return [t.lower().strip("!;:'\",.?") for t in s.split()]

    def feed(self, line):
        tokens = self.tokenize(line)
        for token in tokens:
            self.token_total += 1
            if token in self.token_count:
                self.token_count[token] += 1
            else:
                self.token_count[token] = 1

    def retrieve(self):
        return self.token_count

    def total(self):
        return self.token_total

if __name__ == '__main__':
    import sys
    concord = SimpleConcordance()
    for line in sys.stdin:
        line = line.strip()
        concord.feed(line)
    count = concord.retrieve()
    total = concord.total()
    # print each word seen more than once, as occurrences per 1000 tokens
    for token in count:
        if count[token] > 1:
            proportion = (count[token] / float(total)) * 1000
            print(str(proportion) + ":" + token)
```

The SimpleConcordance class implements a basic word-count algorithm. It counts tokens in the strings we pass to it. (Note the list comprehension in the tokenize method!) When we’re done, we can get a dictionary whose items relate a token (key) to a count (value) with the retrieve method; we can also get the total number of tokens in the text with the total method.
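For comparison, the standard library's collections.Counter (Python 2.7 and later) already does the counting that feed does by hand. The sketch below is a swapped-in alternative, not the code used for the visualization. The function names are made up, and it splits on any whitespace rather than on single spaces.

```python
from collections import Counter


def tokenize(s):
    # Lower-case each whitespace-separated token and strip punctuation.
    return [t.lower().strip("!;:'\",.?") for t in s.split()]


def concordance(lines):
    # Counter.update() adds one to the count of every token in the list.
    counts = Counter()
    for line in lines:
        counts.update(tokenize(line.strip()))
    total = sum(counts.values())
    return counts, total


counts, total = concordance(["It was the best of times,",
                             "it was the worst of times."])
print("%d of %d tokens are 'times'" % (counts["times"], total))
```

Here "It" and "it" are counted together and the trailing punctuation is stripped, so both "times," and "times." count as "times". The sketch prints 2 of 12 tokens are 'times'.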

The loop at the end of the program prints a number for each token that appears more than once in the source text. This number is the ratio of the word’s count to the total number of words in the text: a rough measure of how frequent the word is. (We multiply the number by 1000 to make it a bit easier to deal with.) The output of the program looks like this:

```
0.31650577623:meadows
0.31650577623:blanket
1.26602310492:every
0.31650577623:blisters
0.633011552461:succession
0.474758664346:whist
0.31650577623:cloudiness
0.31650577623:clothes
0.31650577623:surrounding
0.633011552461:second
0.31650577623:shining
0.949517328691:even
0.474758664346:solemn
0.633011552461:cooking
0.474758664346:new
...
```
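The number is occurrences per thousand tokens, so a value of 1.0 means the word accounts for one token in every thousand. Here is a quick check of the arithmetic with made-up numbers (a word seen 4 times in an 8,000-token text):

```python
def per_thousand(count, total):
    # Fraction of all tokens taken up by one word, scaled by 1000.
    # float() matters in Python 2, where int / int truncates to an int.
    return (count / float(total)) * 1000


# Hypothetical numbers: a word seen 4 times in an 8,000-token text.
print(per_thousand(4, 8000))
```

This gives about 0.5. Without the float() call, Python 2 would compute 4 / 8000 as 0, and every proportion would come out as zero.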

Text processing with Processing

We can then write a simple Processing sketch which parses this data and displays it in an interesting way. Here is such a sketch (for certain values of “interesting”):

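```java
String[] lines;
PFont font;

void setup() {
  size(800, 600);
  font = createFont("Arial", 72);
  // one "proportion:word" line per entry, as written by the Python script
  lines = loadStrings("count-stein.txt");
}

void draw() {
  background(255);
  textFont(font);
  fill(0);
  for (int i = 0; i < lines.length; i++) {
    String[] tmp = split(lines[i], ":");
    float sizeFactor = float(tmp[0]);
    String word = tmp[1];
    // more frequent words are drawn larger, at a random position
    textSize(4 + sizeFactor * 3);
    text(word, random(width), random(height));
  }
  noLoop();  // draw once; the random layout would change on every frame otherwise
}
```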

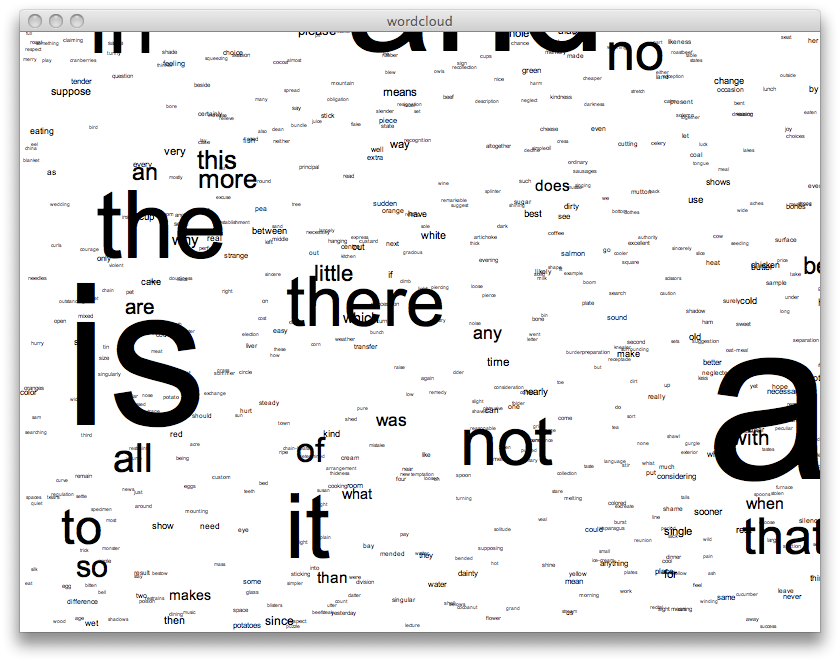

Discussion of the syntax here is beyond the scope of this class, but here’s the gist: we load an array of strings from a file (count-stein.txt in this case, the output of the concordance program above); we then loop over each of those lines, split it into its component parts, take the first part as a floating point number and the second part as a string. The number is then used to determine the size of the string, which gets drawn to the screen in a random place. Here’s a screenshot:

Further reading: Daniel Shiffman’s A2Z syllabus has lots of information on text processing in Java, with many examples that use Processing.
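Before handing a data file to Processing, it can help to check it from the Python side. This sketch parses the same "proportion:word" lines and applies the same size formula as the Processing sketch above. The function names are made up, and splitting on the first colon only is this sketch's choice: it keeps a colon inside a token (for example "10:30") as part of the word, whereas Processing's split() breaks it apart.

```python
def parse_line(line):
    # "0.31650577623:meadows" -> (0.31650577623, "meadows")
    number, word = line.strip().split(":", 1)
    return float(number), word


def text_size(size_factor):
    # Same formula the Processing sketch passes to textSize().
    return 4 + size_factor * 3


for line in ["0.31650577623:meadows", "1.26602310492:every"]:
    factor, word = parse_line(line)
    print("%s %.2f" % (word, text_size(factor)))
```

For these two sample lines it prints meadows 4.95 and every 7.80. Even the most frequent words end up only a few points larger than the rarest ones.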

Python and the web: CGI

The simplest way to make your Python script available on the web is to use CGI (“Common Gateway Interface”), a basic protocol that a web server uses to pass a request to a program on the server and to send the program’s output back to the client. CGI is an aging standard and has largely been replaced (especially for Python web applications) by more sophisticated techniques such as WSGI. Still, for simple cases CGI is a good choice: it’s easy to understand and requires no external software and little server configuration. (Be aware that Python’s cgi and cgitb modules, used in the examples below, were deprecated in Python 3.11 and removed in Python 3.13.) If you’re interested in serious web applications, though, look into some of the frameworks listed below.
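A CGI program does not strictly need a special library. The server describes the request in environment variables (such as REQUEST_METHOD and QUERY_STRING) and sends the program's standard output (header lines, a blank line, then the body) back to the client. The following is a minimal sketch of that contract without the cgi module, which also matters on Python 3.13 and later. The function name cgi_response is hypothetical.

```python
import os
import sys


def cgi_response(environ):
    # The web server passes request details to a CGI program through
    # environment variables such as REQUEST_METHOD and QUERY_STRING.
    method = "POST" if environ.get("REQUEST_METHOD") == "POST" else "GET"
    query = environ.get("QUERY_STRING", "")
    body = "<p>%s request with a %d-character query string</p>" % (method, len(query))
    # The reply goes to standard output: a header line, a blank line,
    # then the document itself.
    return "Content-Type: text/html\n\n" + body + "\n"


if __name__ == "__main__":
    sys.stdout.write(cgi_response(os.environ))
```

The query string is reported only by its length. Echoing it back verbatim would be unsafe, for the reasons given in the list of things a program must not do.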

Further reading:

- CodePoint Web Python Tutorial has good examples for CGI and WSGI.

- Django, web.py and Pylons are all good frameworks for building web applications with Python.

- You might also be interested in Google App Engine.

Requirements: server setup and code

In order to run Python programs as CGI scripts, your server must be set up correctly. Instructions for doing this are largely beyond the scope of this tutorial (if you’re using shared hosting, contact your help desk). In Apache, the following two directives, if placed in a <Directory> block or .htaccess file, should get things going:

```apache
Options ExecCGI
AddHandler cgi-script .py
```

These lines tell Apache to treat .py files as CGI scripts. When a client (such as a web browser) requests a file with a .py extension, Apache runs the program (using the interpreter named on its first line) and returns the program’s output to the client.

Your program must meet the following requirements:

- The first line of the program must be #!/usr/bin/python (or whatever the path to Python is on your server); without this line, Apache won’t know how to run the program, and you’ll get an error.

- The permissions on the file must be correct; run chmod a+x your_script.py on the command line, or use your SFTP client to set the permissions such that all users can execute the program.

- Before your program produces any other output, it must print Content-Type: text/html followed by two newlines, that is, the header line and then a blank line (e.g. print("Content-Type: text/html\n"), where print itself adds the second newline). Without this header and blank line, Apache and the client’s web browser won’t be able to interpret your program’s output. (Other content types are valid, such as text/plain or application/xml, depending on what your program is outputting. Here’s a list of valid content types.)

Your program must not:

- Use any user-supplied data to operate on the server’s file system. Providing a field that would, for example, allow the user to open the file named by that field is a bad idea: it opens the door to security problems. If you must use user-supplied data in this manner, carefully validate and filter it first.

- Echo any user input back in its output without escaping it first. Displaying unfiltered user-supplied data is an easy way to facilitate cross-site scripting attacks (more information). Always use the cgi module’s escape function (html.escape in Python 3, where cgi.escape was removed in version 3.8) to convert HTML in the user’s input to plain text.

- Write to files on the server. If your application is sophisticated enough to require storing user data, you’d be better off using a database, which will provide better structure, performance and security than storing data in flat files.
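Escaping is a one-line operation. The sketch below uses html.escape on Python 3 and falls back to cgi.escape on Python 2. The sample input is made up.

```python
try:
    from html import escape   # Python 3.2 and later
except ImportError:
    from cgi import escape    # Python 2; cgi.escape was removed in Python 3.8

user_input = '<script>alert("gotcha")</script>'
# quote=True also escapes double quotes, so the result is safe inside a
# double-quoted HTML attribute as well as in ordinary element content.
safe = escape(user_input, quote=True)
print("<p>You typed: " + safe + "</p>")
```

The browser displays the user's text literally instead of running it as a script, because <, > and " have been replaced with &lt;, &gt; and &quot;.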

Two quick examples

First, a random sonnet script. Available here.

```python
#!/usr/bin/python
import cgi
import cgitb
cgitb.enable()

import markov_by_char

mark = markov_by_char.CharacterMarkovGenerator(7, 80)
for line in open("/home/aparrish/texts/sonnets.txt"):
    mark.feed(line.strip())

print("""Content-Type: text/html

<html>
<head>
<title>Test</title>
</head>
<body>
<h1>A Sonnet.</h1>
""")

for i in range(14):
    print(mark.generate())
    print("<br/>")

print("""</body>
</html>""")
```

You’ll notice there’s nothing particularly special about this program: it uses the CharacterMarkovGenerator class to generate Markov-chain text from a given file, then prints it out. There’s a small amount of HTML surrounding the output, and the required Content-Type line, but otherwise nothing out of the ordinary. The program imports the cgi module but doesn’t actually use it here. The cgitb module (a separate module) is enabled so that any error shows up as a readable traceback in the browser.

Getting data back from the user is moderately more complicated. The next program allows the user to provide the source text for the Markov chain and to specify the order of the n-gram and the number of lines to generate. It does so using the cgi module’s FieldStorage class, whose instances behave like a dictionary holding whatever information the user submitted to the program.

If there’s been any information submitted to the script, either using POST or GET (from, e.g., a form submission), then the program displays the Markov chain output. Otherwise, it displays a form to solicit this information:

```python
#!/usr/bin/python
import cgi
import cgitb
cgitb.enable()

import markov_by_char

print("""Content-Type: text/html

<html>
<head>
<title>Test</title>
</head>
<body>
<h1>Markov Chain: BYOT.</h1>
""")

form = cgi.FieldStorage()

# if user supplied input...
if len(form) > 0:
    n = int(form.getfirst('n'))
    linecount = int(form.getfirst('linecount'))
    mark = markov_by_char.CharacterMarkovGenerator(n, 255)
    # splitlines() also drops the "\r" that browsers send with textarea line breaks
    for line in form.getfirst('sourcetext').splitlines():
        mark.feed(line)
    for i in range(linecount):
        # the output is built from user-supplied text, so escape it before display
        print(cgi.escape(mark.generate()))
        print("<br/>")

# if no input supplied
else:
    print("""
<form method="POST">
N-gram order: <input type="text" name="n"/><br/>
Lines to generate: <input type="text" name="linecount"/><br/>
Source text:<br/>
<textarea rows=15 cols=65 name="sourcetext"></textarea><br/>
<input type="submit" value="Generate!"/>
</form>""")

print("""</body>
</html>""")
```
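Because cgi.FieldStorage is gone in Python 3.13, it may help to see roughly what it does for a simple form. This is a Python 3 sketch, not a drop-in replacement. It handles only URL-encoded GET and POST data (no file uploads), and read_form and getfirst are hypothetical helpers modeled loosely on FieldStorage.getfirst().

```python
import io
from urllib.parse import parse_qs   # Python 3


def read_form(environ, stdin):
    # GET: the fields arrive URL-encoded in the QUERY_STRING variable.
    # POST (application/x-www-form-urlencoded): the same encoding arrives
    # on standard input, and CONTENT_LENGTH says how much of it to read.
    if environ.get("REQUEST_METHOD", "GET").upper() == "POST":
        length = int(environ.get("CONTENT_LENGTH") or 0)
        data = stdin.read(length)
    else:
        data = environ.get("QUERY_STRING", "")
    # parse_qs maps each field name to a list of values; fields left
    # blank are dropped unless keep_blank_values=True is passed.
    return parse_qs(data)


def getfirst(form, name, default=None):
    # First submitted value for a field, or default if it is missing.
    values = form.get(name)
    return values[0] if values else default


# Simulate a POST submission without a web server.
body = "n=3&linecount=5&sourcetext=It+was+the+best+of+times"
form = read_form({"REQUEST_METHOD": "POST", "CONTENT_LENGTH": str(len(body))},
                 io.StringIO(body))
print(getfirst(form, "n"), getfirst(form, "sourcetext"))
```

In a real script you would pass os.environ and sys.stdin. CONTENT_LENGTH counts bytes and the stream here is read as text, which lines up only because URL-encoded form data is plain ASCII.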
