Here’s a list of links to student projects made for Reading and Writing Electronic Text, along with brief descriptions. I thought all of my students did great work this year, and it was a pleasure to teach them!

Centrality by Aankit Patel. I guess I could describe this as “lexicographical dataglitch-punk.” There is an online version available.

Bing Huang’s TextFinal is an audiovisual meditation on the Declaration of Independence.

Caitlin Weaver produced Susan Scratched, a poem glitched through with a distinctive kind of repetition. A lovely performance of the poem is available on SoundCloud.

Clara Santamaria Vargas made Gertrude’STime, which takes the phrase “in the morning there is meaning, in the evening there is feeling” from Tender Buttons literally, and generates poems accordingly.

Dan Melancon’s L SYSTEM POEM SYSTEM POEM L POEM L SYSTEM does what it says in the title: applies a Lindenmayer System to an input text. The output exhibits a strangely hypnotic form of uncanny alien repetition.
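For readers who haven’t met L-systems: an L-system rewrites every symbol of a string in parallel, according to a set of production rules, and repeats that for several generations. Here is a minimal Python sketch that treats each word of a text as a symbol. It is not Dan’s code, and the rules are hypothetical, chosen to mirror Lindenmayer’s classic algae system (A -> AB, B -> A).

```python
def lsystem_step(words, rules):
    """Rewrite every word in parallel; words with no rule are copied unchanged."""
    out = []
    for w in words:
        out.extend(rules.get(w, [w]))
    return out


def lsystem(axiom, rules, generations):
    """Apply the rewriting rules to the axiom text for the given number of generations."""
    words = axiom.split()
    for _ in range(generations):
        words = lsystem_step(words, rules)
    return " ".join(words)


# Hypothetical rules for illustration, shaped like the algae system A -> AB, B -> A.
rules = {
    "morning": ["morning", "meaning"],
    "meaning": ["morning"],
}

print(lsystem("in the morning", rules, 3))
# in the morning meaning morning morning meaning
```

Because every word is rewritten at once, the text grows by Fibonacci-like steps, which is one source of the repetitive texture that L-system poems tend to have.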

Eamon O’Connor’s final project uses the CMU pronouncing dictionary to produce metrical verse. Several examples are included on the page.
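The CMU pronouncing dictionary marks each vowel phoneme with a stress digit (0 for unstressed, 1 for primary stress, 2 for secondary stress), and that is what makes it useful for meter. As a rough sketch of the general idea (not Eamon’s implementation), the Python below reads a few entries in CMUdict’s plain-text format, reduces each word to a string of stresses, and searches for word sequences that fit a target pattern. The excerpt is typed in by hand for illustration, and folding secondary stress into “stressed” is an assumed simplification.

```python
# A few hand-copied entries in CMUdict's "WORD  PHONEMES" format (illustrative excerpt).
CMU_EXCERPT = """\
THE  DH AH0
CAT  K AE1 T
SLEEPS  S L IY1 P S
UPON  AH0 P AA1 N
WINDOW  W IH1 N D OW0
"""


def parse_cmudict(text):
    """Map each word to a stress string, e.g. 'window' -> '10'."""
    stresses = {}
    for line in text.splitlines():
        if not line.strip() or line.startswith(";;;"):  # skip blanks and comments
            continue
        word, *phones = line.split()
        word = word.split("(")[0].lower()  # 'THE(2)' is an alternate pronunciation of 'the'
        digits = [p[-1] for p in phones if p[-1].isdigit()]
        # Treat primary (1) and secondary (2) stress alike; keep the first pronunciation seen.
        stresses.setdefault(word, "".join("1" if d in "12" else "0" for d in digits))
    return stresses


def stress_pattern(line, stresses):
    """Concatenate the stress strings of every word in a line."""
    return "".join(stresses[w] for w in line.lower().split())


def is_iambic(line, stresses, feet):
    """True if the line is `feet` iambs, optionally with one extra unstressed syllable."""
    pattern = stress_pattern(line, stresses)
    return pattern in ("01" * feet, "01" * feet + "0")


def build_line(vocab, target, stresses):
    """Depth-first search for a word sequence whose stresses spell out `target`."""
    if target == "":
        return []
    for word in vocab:
        s = stresses[word]
        if s and target.startswith(s):
            rest = build_line(vocab, target[len(s):], stresses)
            if rest is not None:
                return [word] + rest
    return None  # no sequence of vocab words fits the pattern


stresses = parse_cmudict(CMU_EXCERPT)
print(stress_pattern("the cat upon the window", stresses))  # 0101010
print(is_iambic("the cat upon the window", stresses, 3))  # True
```

A real generator would load the full dictionary and shuffle or weight the vocabulary so the search doesn’t return the same line every time.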

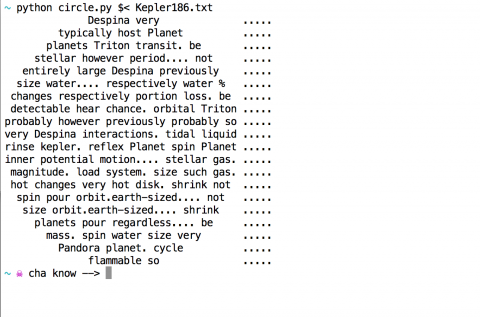

Hellyn Teng’s final project was Kepler-186, a multimedia poem about exoplanets and home economics. Documentation includes sound snippets and screenshots.

Jason Sigal’s write-up of his final project, The Phrases and Pronunciation, is fantastic: he goes into detail about his process and the technical and conceptual decisions he made along the way.

@mfilippino Marriage is a mouth oil of going on together that requires cork; edge means that there is a holding on to quest.

— Rambling Taxidermist (@TaxidermyRamble) May 22, 2014

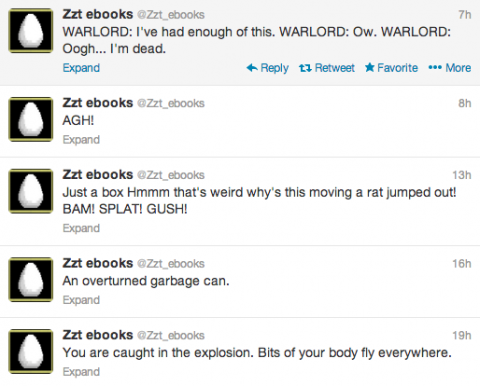

John Ferrell made a Twitter bot called the Rambling Taxidermist, whose inspiration and inner workings he has written up here. The bot responds to tweets about marriage with ill-advised, mashed-up advice composed partially from a taxidermy handbook.

Michelle Chandra created a lovely poem about loneliness, drawing upon a corpus of well-known quotes. I love the repetition and alliteration in this one; it’s well worth reading.

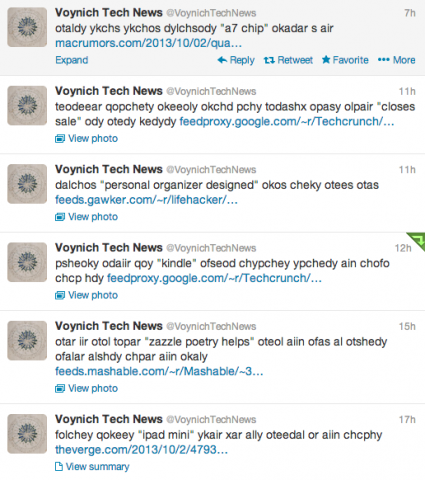

Ran Mo’s Birdy News juxtaposes Twitter jokes with the NY Times, often to humorous effect.

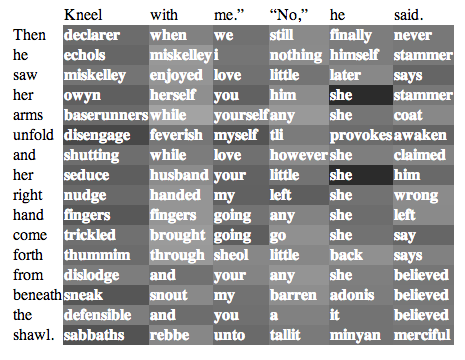

Robert Dionne’s final project, Reading Between the Lines, generates multidimensional poems from distributed word vectors.

Industries must never go everywhere.

— everything always (@_all_of_us_) May 27, 2014

Salem Al-Mansoori’s final project, @_all_of_us_, is a machine for creating random platitudes and aphorisms. It takes the form of a Twitter bot and a generative comic strip.

Sam Lavigne’s program to Transform any text into a patent application has attracted a lot of press attention, so you might have already seen it! It’s original and well executed, and I love this project.

Sheri Manson created a series of three poems, based on words and phrases drawn randomly from an interesting selection of source texts.

Uttam Grandhi’s project The Baptized Pixel uses image data to generate poems with binary-like repetition.

Recent silence of NSA doing good works.

— One True Wise Man (@onetruewiseman) May 25, 2014

Vicci Ho made a Twitter bot, @onetruewiseman, that combines the social media wisdom of several conservative luminaries.